RFP weighted scoring reminds me of getting grades in school. Some papers and tests came back with an exact score like 96 out of 100 points. On the other hand, I often received more ambiguous evaluations like a letter grade, a smiley face or a sticker. While these ratings are helpful for tracking individual progress, if pressed to select the best student in the class, it’s difficult to make a data-driven choice based on gold stars.

Similarly, when it comes to RFP scoring, you need to be as objective and precise as possible. Admittedly, this is a challenge when your team needs to interpret text-based answers and then assign them a quantifiable rating. Luckily, making it easier to objectively score complex answers is exactly what weighted RFP scoring is designed for.

This post will explore the ins and outs of RFP weighted scoring. To begin, we’ll cover the basics and benefits of RFP weighted scoring. Next, we’ll walk through a step-by-step guide to setting up weighted scoring to evaluate vendors. Finally, we’ll conclude with weighted scorecard examples and templates to help you get started.

- RFP weighted scoring basics

- How to set up a weighted scoring model

- RFP weighted scoring example and template

RFP weighted scoring basics

If you want your RFP process to succeed, two areas deserve close attention. The first is learning how to write an RFP and the second is finding the best way to evaluate the resulting proposals.

What is weighted scoring?

Weighted scoring is an approach to request for proposal (RFP) evaluation that uses points and weights to reflect the relative importance of various RFP criteria. Unlike simple scoring, which gives all questions equal bearing on the evaluation results, weighted scoring lets you prioritize decision factors based on their impact on the end goal.

Ultimately, the weighted scoring model offers a data-based approach to finding the best-fit vendor for your needs.

A weighted scoring scenario

In practice, weighted scoring works by assigning a point value to each RFP question, then an importance, or weight, to each RFP section. For example, you may want to evaluate a vendor’s approach, experience, functionality, innovation and cost. However, for this project, it’s most important to select a vendor that offers the best combination of functionality, experience and value.

Weighted scoring enables you to rank your priorities by assigning them a percentage of the total value of the RFP. In this case, your section weighting might look like this:

- Approach – 10%

- Experience – 25%

- Functionality – 30%

- Innovation – 10%

- Cost – 25%

Within each section, you may have any number of questions to help you make your decision. Regardless of how many there are, this breakdown creates a weighted scorecard that lets you clearly see which vendor checks the right boxes instead of the most boxes. In effect, it uses a defined scale to “grade” RFP responses and rank potential suppliers more objectively.
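Under the hood, the scorecard is a weighted sum. The Python sketch below uses the section weights listed above and assumes each section has already been scored on a 0-100 scale (for example, the percentage of that section’s question points a vendor earned); the function name and the vendor’s section scores are hypothetical.

```python
# Section weights from the scenario above, expressed as fractions of 1.0.
SECTION_WEIGHTS = {
    "Approach": 0.10,
    "Experience": 0.25,
    "Functionality": 0.30,
    "Innovation": 0.10,
    "Cost": 0.25,
}


def weighted_total(section_scores: dict[str, float], weights: dict[str, float]) -> float:
    """Combine per-section scores (each 0-100) into one weighted score out of 100."""
    # Weights must cover 100 percent, otherwise totals are not comparable across vendors.
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("section weights must add up to 100 percent")
    return sum(weights[section] * section_scores[section] for section in weights)


# Hypothetical vendor: each value is the percentage of that section's points earned.
vendor_a = {"Approach": 90, "Experience": 70, "Functionality": 85, "Innovation": 95, "Cost": 60}
print(round(weighted_total(vendor_a, SECTION_WEIGHTS), 2))
```

With these numbers the hypothetical vendor totals 76.5 out of 100. Its 95 in Innovation adds only 9.5 points because that section carries 10 percent, while its 85 in Functionality adds 25.5.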

How to set up a weighted scoring model

Step 1: Establish your criteria

To leverage weighted scoring successfully, you must prepare long before you have proposals in hand. That means clearly defining your criteria. As you speak with stakeholders, research the market and define goals, create a wishlist to document what your ideal solution looks like. Next, review your list and categorize the requirements by topic.

For example, items in your list may address company background, functionality, references and experience, customer success, finances, company policies and so on. Later, you’ll use this organized list to write your RFP questions and sections. But first, you need to rank each item according to its value to the project.

Step 2: Prioritize what’s important

To determine the weight of your RFP sections, you must decide which factors are most important. RFPs contain a wide variety of questions about a multitude of topics. Of course, some pieces of information are standard and receive boilerplate answers, while others are more complex and designed to elicit insightful responses. Starting with your list of requirements, carefully consider each item and classify it into one of the following categories:

- Must have: The solution absolutely must have this element to ensure success of the project

- Nice to have: Enhances or strengthens the offer, but not essential to achieving the stated goal

- Informational: Essential or helpful information, but there is no wrong or right answer

- Not in scope: Not in budget, unneeded or detracts from the desired outcome

Now, look at the items within each section and use them to determine that section’s weight. Sections with several must-have requirements should account for a higher percentage of the value of the proposal.

You may have sections that are purely informational and receive no weight at all. Conversely, you may find that one section of your RFP should account for the majority of the weight. Regardless, be sure to make your section weights add up to 100 percent to simplify final evaluation.
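One way to turn the classifications above into section weights is to give each category an importance score, add those scores up per section and scale the results so they total 100 percent. This is a minimal Python sketch of that idea; the point values (3 for must-have, 1 for nice-to-have, 0 for informational or out-of-scope items) and the example sections are assumptions for illustration, not a standard.

```python
# Hypothetical importance points per requirement category (an assumption, not a standard).
CATEGORY_POINTS = {
    "must_have": 3,
    "nice_to_have": 1,
    "informational": 0,
    "not_in_scope": 0,
}


def section_weights(sections: dict[str, list[str]]) -> dict[str, float]:
    """Turn each section's requirement categories into a percentage weight summing to 100."""
    raw = {
        name: sum(CATEGORY_POINTS[category] for category in categories)
        for name, categories in sections.items()
    }
    total = sum(raw.values())
    if total == 0:
        raise ValueError("no section contains a weighted requirement")
    # Purely informational sections end up with a weight of 0.
    return {name: 100 * points / total for name, points in raw.items()}


# Hypothetical RFP sections and the category of each requirement in them.
sections = {
    "Company background": ["informational", "informational"],
    "Functionality": ["must_have", "must_have", "nice_to_have"],
    "Experience": ["must_have", "nice_to_have"],
    "Cost": ["must_have"],
}
for name, weight in section_weights(sections).items():
    print(f"{name}: {weight:.1f}%")
```

With these hypothetical inputs, Functionality receives 50 percent, Experience about 28.6 percent, Cost about 21.4 percent and Company background 0 percent. Treat the output as a starting point for discussion with stakeholders; you can round or adjust it, as long as the final weights still add up to 100 percent.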

Step 3: Pick your process

There are two primary approaches to weighted scoring: manual and automated. Naturally, both options have pros and cons. The right choice depends on how you run your RFPs and how much you’ve invested in digital transformation.

Option A: Manual scoring with weighted scoring spreadsheets

Using Excel spreadsheets, you can apply weighted scoring to almost any RFP. If you only issue a handful of simple RFPs per year, this approach likely meets your needs.

- Advantage: You don’t have to purchase specialized software, it’s an immediate solution and you can find serviceable templates online.

- Disadvantage: To manage the document and troubleshoot issues, you’ll need to be an Excel master. Your spreadsheets will likely use macros and complex formulas to tabulate results. In addition, side-by-side comparisons tend to make these spreadsheets very large and difficult to navigate. It’s also worth considering that you will need to compile feedback from your team manually and summarize your findings for your decision makers.

Unfortunately, many organizations use this approach because it’s “free”. However, it opens your organization up to the risk of miscalculation while discouraging more in-depth analysis. Furthermore, it’s tempting to simplify scoring by rating only whole sections rather than individual questions, which makes overall scores less aligned with your goals.

While using Excel spreadsheets to facilitate weighted scoring may be the most common approach, it may not be the most efficient. If your RFP volume is high, or if your team is stretched thin, going digital and adopting an RFP management system can be worth the investment.

Option B: Automated scoring with RFP software

If efficiency and consistency are priorities for your sourcing team, investing in software that enables RFP automation is a smart choice. While RFP solutions deliver value at every step of the RFP process, several of their features make request for proposal evaluation and scoring easier.

First, everything is centralized. Requirements gathering, real-time progress visibility, workflow management, dynamic templates and automated scoring all live in one place, which means fewer emails.

Autoscoring allows you to set specific scoring values in your custom question drop-down menus, so when a respondent selects a given option from the menu, a default score is applied automatically.
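Conceptually, autoscoring is a lookup from each drop-down option to a predefined score. The Python sketch below assumes a single question with hypothetical options and point values. It also assumes an evaluator can override the default score; whether and how a particular RFP tool supports overrides is an assumption here, not something every product necessarily offers.

```python
from typing import Optional

# Hypothetical drop-down options for one closed-ended question, each with a default score.
SUPPORT_OPTIONS = {
    "24/7 phone support": 5,
    "Business-hours phone support": 3,
    "Email support only": 1,
}


def autoscore(selected_option: str, options: dict[str, int], override: Optional[int] = None) -> int:
    """Return the default score for the selected option unless an evaluator overrides it."""
    if selected_option not in options:
        raise ValueError(f"unknown option: {selected_option!r}")
    # Assumed behavior: an explicit evaluator override replaces the default score.
    if override is not None:
        return override
    return options[selected_option]


print(autoscore("Business-hours phone support", SUPPORT_OPTIONS))  # default score
print(autoscore("Email support only", SUPPORT_OPTIONS, override=2))  # evaluator adjustment
```

Keeping the option-to-score table in one place means every response to the same question is scored the same way, which is where much of autoscoring’s consistency comes from.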

Advantage: Software saves a significant amount of time throughout the RFP process. Within the evaluation and scoring phase specifically, it automates much of the work and makes weighting and tabulating scores far easier and nearly instant. In addition, stakeholder evaluations are collected in one place, so you don’t have to track document versions.

Disadvantage: It’s an investment. To deploy RFP software, you’ll need to invest both time and money. Luckily, most RFx solutions are easy to use, which makes onboarding relatively fast. In addition, they are often more affordable than you may think, so they can deliver a strong return on investment. If you’re curious to learn more or see it in action, request a demo.


Step 4: Carefully create your questions

Whether you decide to go digital or stick with spreadsheets, you’ll find scoring much easier if you write your RFP questions with care. As you begin turning your list of requirements into questions, phrase as many of them as possible in a closed format.

For example, if you decide that 24/7 phone access to the customer success team is a must-have requirement, and the response is worth five points, you could phrase your question several ways.

Open vs. closed-ended RFP questions

An open-ended format might be: Describe your approach to customer success. This prompt may result in a variety of responses like ‘Our customer success team is available by phone or email,’ or ‘Our company provides ongoing support throughout the entire onboarding process in addition to quarterly checkpoints.’ Unfortunately, neither of these answers provides the information you need, so how can you accurately score the response?

Closed-ended questions are much easier to answer and score. A closed-ended version of the question above might be ‘Does your customer success team provide 24/7 support by phone?’ In this case, a response of no receives one point while a response of yes receives five points. Besides making scoring more objective and clear, closed-ended questions can be scored automatically in RFP software.

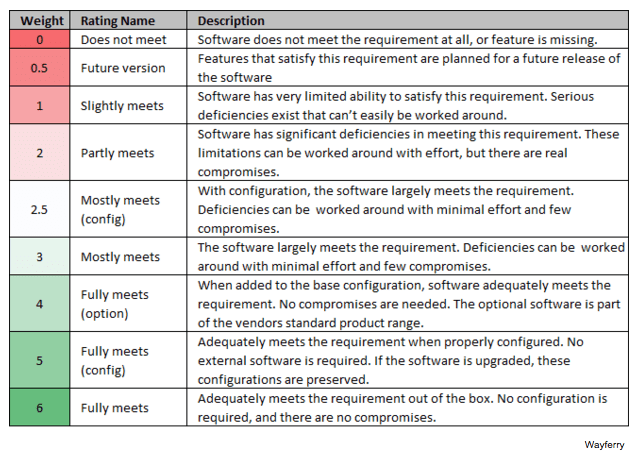

Admittedly, not every question can be phrased in a yes or no format. So, it’s important to be as specific as possible in your question phrasing and to give your scorers guidance. This article from CIO.com explains how to rate requirements for importance and gives a great example of turning “content” into a concrete score (see their example table below).

Essentially, you are:

- Quantifying your priorities

- Clearly defining expectations

- Taking subjectivity out of the equation

Step 5: Calculate and compare

One of the big selling points for weighted scoring is that it makes vendor comparison so much easier. The problem with general section rating percentages is that they’re vague (and highly subjective), which can make decision making murky.

But when you have exact section and question scores, you can compare vendors easily and feel confident about your selection.
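Comparing vendors comes down to rolling question scores up into section percentages, applying the section weights and ranking the totals. The Python sketch below does that and also keeps each section’s weighted contribution, so you can see where each total comes from. The weights, vendor names and question scores are all hypothetical.

```python
# Hypothetical section weights (fractions of 1.0) for a three-section RFP.
WEIGHTS = {"Experience": 0.40, "Functionality": 0.35, "Cost": 0.25}

# Hypothetical question results: section -> list of (points earned, points possible).
VENDORS = {
    "Vendor A": {
        "Experience": [(5, 5), (3, 5)],
        "Functionality": [(4, 5), (5, 5)],
        "Cost": [(2, 5)],
    },
    "Vendor B": {
        "Experience": [(3, 5), (2, 5)],
        "Functionality": [(3, 5), (4, 5)],
        "Cost": [(5, 5)],
    },
}


def section_percent(answers: list[tuple[int, int]]) -> float:
    """Share of the section's available question points the vendor earned, on a 0-100 scale."""
    earned = sum(points for points, _ in answers)
    possible = sum(maximum for _, maximum in answers)
    return 100 * earned / possible


def contributions(results: dict[str, list[tuple[int, int]]],
                  weights: dict[str, float]) -> dict[str, float]:
    """Weighted points each section adds to the vendor's total (out of 100)."""
    return {section: weights[section] * section_percent(results[section]) for section in weights}


def rank_vendors(vendors: dict, weights: dict[str, float]) -> list[tuple[str, float, dict]]:
    """Return (vendor, total, per-section contributions) tuples, highest total first."""
    rows = []
    for name, results in vendors.items():
        parts = contributions(results, weights)
        rows.append((name, sum(parts.values()), parts))
    return sorted(rows, key=lambda row: row[1], reverse=True)


for name, total, parts in rank_vendors(VENDORS, WEIGHTS):
    detail = ", ".join(f"{section} {points:.1f}" for section, points in parts.items())
    print(f"{name}: {total:.1f} ({detail})")
```

In this hypothetical data, Vendor A (73.5) outranks Vendor B (69.5) even though Vendor B earns full marks on Cost, because Vendor A’s stronger Experience and Functionality scores carry more combined weight.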

A few RFP weighted scoring best practices

- Reference your requirements discovery documents regularly

- Share your criteria and evaluation plan with vendors

- Establish a question and answer period to allow vendors to submit clarifying questions

- Create a request for proposal evaluation guide and scoring rubric

- Avoid unintentional bias by adjusting scoring visibility to hide company name and scoring results until the conclusion of the evaluation process

- Reconcile any scoring anomalies among stakeholders

- Keep the big-picture project goal in mind when writing, weighting and scoring RFPs
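Reconciling scoring anomalies starts with finding them. This Python sketch flags questions where evaluators’ scores are far apart so the team can discuss them before finalizing; the question IDs, the 1-5 scale and the two-point spread threshold are all hypothetical choices.

```python
from statistics import mean

# Hypothetical scores from three evaluators on a 1-5 scale, keyed by question ID.
evaluator_scores = {
    "Q1": [4, 5, 4],
    "Q2": [1, 5, 3],
    "Q3": [2, 2, 3],
}


def flag_anomalies(scores: dict[str, list[int]], max_spread: int = 2) -> list[str]:
    """Return question IDs whose highest and lowest evaluator scores differ by more than max_spread."""
    return [question for question, marks in scores.items() if max(marks) - min(marks) > max_spread]


for question in flag_anomalies(evaluator_scores):
    marks = evaluator_scores[question]
    print(f"{question}: scores {marks}, mean {mean(marks):.2f}, discuss before finalizing")
```

A spread threshold is only one possible trigger; the point is to surface disagreements for discussion rather than silently averaging them away.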

RFP weighted scoring example and template

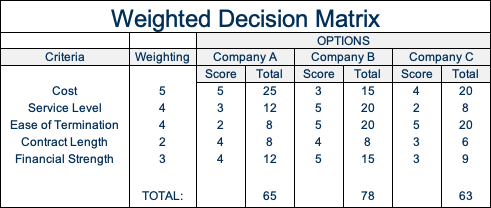

Weighted decision matrix: EPM

Expert Program Management (EPM) created this weighted decision matrix, which covers an example of a business evaluating facilities management providers.

As you can see in the weighting column, they placed the greatest value on cost, followed by service level and ease of termination. They also considered contract length and financial strength.

Here’s what they say about the results:

“Because our most important factor was cost, we might expect the company with the lowest cost to be our preferred option. Interestingly, in the above example, it is actually the company with the highest cost that comes out on top in our decision matrix.

“This is because this company scores much better in all other factors than the cheapest company, meaning this company has a very high service level and is the easiest contract to cancel if things aren’t working out.”

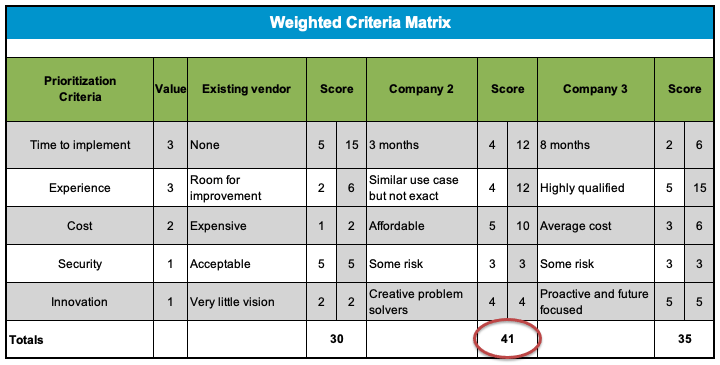

Weighted Criteria Matrix: GoLeanSixSigma.com

This weighted criteria matrix from GoLeanSixSigma.com contains both a fillable template and an example of how the matrix should look once completed.

Below, they explain its use:

“A Weighted Criteria Matrix is a decision-making tool that evaluates potential options against a list of weighted factors. Common uses include deciding between optional solutions or choosing the most appropriate software application to purchase.”

To make the right decision, you need the right information: data that is as objective as possible. To get that data, you need a game plan not only for what you’re asking, but for how you’re going to make sense of the answers.

That’s why you need a plan to evaluate and score proposals before you publish your RFP. Then comparing results and picking a winner becomes much easier. So, while setting up weighted scoring takes an initial time investment, it more than pays off in the end.